Sensors are a powerful tool to automate process confirmation. Instead of manually clicking a button to confirm each completed step, a sensor can automatically detect that an action has taken place.

This enables hands-free operation and guided process flow, allowing operators to move through steps seamlessly and efficiently.

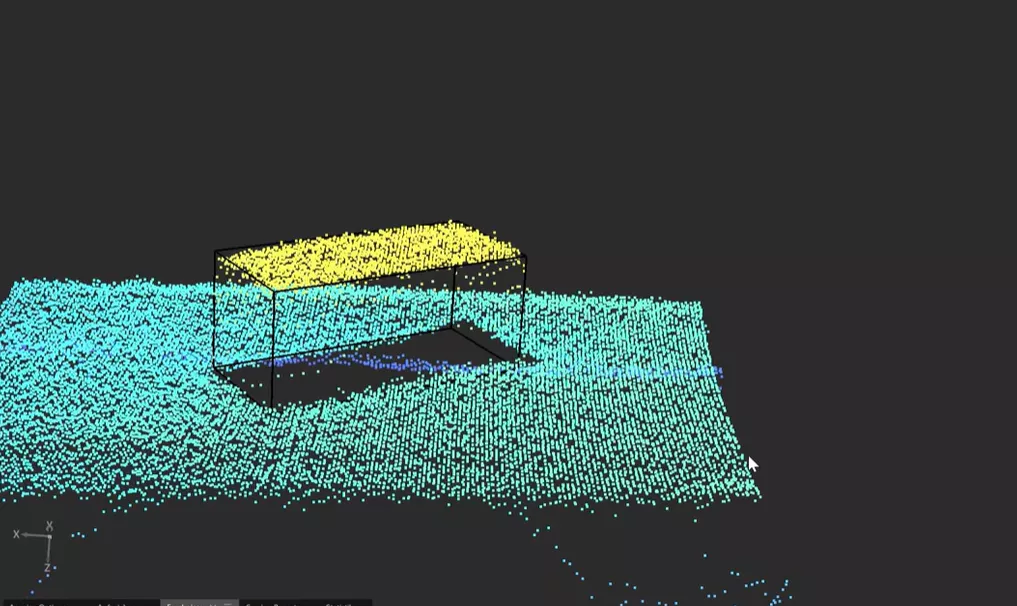

3D sensors use the time-of-flight principle to measure, pixel by pixel, the distance between the sensor and the nearest surface: they emit light and determine how long it takes to be reflected back. This yields a height value for each pixel within the target frame, resulting in a depth map or 3D point cloud that represents the shape, contour, and spatial structure of the observed object or environment.
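As a rough illustration of the time-of-flight relationship: the light travels to the surface and back, so the distance is half the round-trip path, d = c·t/2. The sketch below assumes a pulsed sensor mounted looking straight down at a flat work surface. The function names and the mounting height are hypothetical. Many real sensors measure phase shift rather than pulse timing and report calibrated distances directly.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """One-way distance for a pulsed time-of-flight measurement.

    The light travels to the surface and back, so the distance to the
    surface is half of the total path length c * t.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def height_map_m(round_trip_times_s, mounting_height_m: float):
    """Turn a 2D grid of per-pixel round-trip times into heights above the table.

    Assumption (hypothetical setup): the sensor looks straight down and the
    empty work surface is `mounting_height_m` away, so
    height = mounting height - measured distance.
    """
    return [
        [mounting_height_m - tof_distance_m(t) for t in row]
        for row in round_trip_times_s
    ]


# Example: a 2x2 frame, sensor 1.0 m above the table. The left column sees the
# bare table (1.0 m away); the right column sees the top of a 0.1 m tall part.
frame = [
    [2 * 1.0 / SPEED_OF_LIGHT_M_PER_S, 2 * 0.9 / SPEED_OF_LIGHT_M_PER_S],
    [2 * 1.0 / SPEED_OF_LIGHT_M_PER_S, 2 * 0.9 / SPEED_OF_LIGHT_M_PER_S],
]
heights = height_map_m(frame, mounting_height_m=1.0)
```

The resulting grid holds heights of roughly 0.0 m for the table pixels and 0.1 m for the part pixels. In practice, sensor noise and calibration determine how fine these differences can be resolved.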

This makes 3D sensors a powerful tool for detecting the presence or absence of objects based on their shape and contour, enabling object recognition. Their ability to analyze depth and geometry also makes them ideal for object tracking and workflow automation within advanced operator guidance systems.

Hand gesture confirmation

Ideal for recognizing hand movements or confirming operator actions without physical contact.

Virtual confirmation

Define virtual buttons that allow operators to proceed through instructions simply by moving their hand within a sensor-defined zone.
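A virtual button can be pictured as a region of the height map plus a height band. The button counts as pressed when enough pixels in that region show something (such as a hand) within the band for several consecutive frames. The class, zone coordinates, and thresholds below are hypothetical. Requiring consecutive frames is one common way to suppress noise, not a documented product behavior.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualButton:
    """Hypothetical zone-based button evaluated on height maps in millimetres."""

    # Zone in pixel coordinates: rows [row_start, row_end), cols [col_start, col_end).
    row_start: int
    row_end: int
    col_start: int
    col_end: int
    min_height_mm: float = 20.0   # below this: table surface or sensor noise
    max_height_mm: float = 200.0  # above this: e.g. an arm passing high over the zone
    fill_ratio: float = 0.3       # share of zone pixels that must be occupied
    frames_required: int = 3      # consecutive occupied frames before confirming
    _streak: int = field(default=0, init=False, repr=False)

    def occupied(self, heights) -> bool:
        """True if enough zone pixels lie inside the height band."""
        cells = [
            heights[r][c]
            for r in range(self.row_start, self.row_end)
            for c in range(self.col_start, self.col_end)
        ]
        hits = sum(self.min_height_mm <= h <= self.max_height_mm for h in cells)
        return hits / len(cells) >= self.fill_ratio

    def update(self, heights) -> bool:
        """Process one frame; return True exactly once per continuous press."""
        if self.occupied(heights):
            self._streak += 1
            return self._streak == self.frames_required
        self._streak = 0  # hand left the zone: re-arm the button
        return False


button = VirtualButton(row_start=0, row_end=2, col_start=0, col_end=2)
empty = [[0.0, 0.0, 0.0, 0.0] for _ in range(4)]
hand = [
    [80.0, 80.0, 0.0, 0.0],
    [80.0, 80.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
results = [button.update(f) for f in (hand, hand, hand, hand, empty)]
```

Here the button fires on the third consecutive frame with a hand in the zone and stays silent while the hand remains. It re-arms only after the zone is cleared, so one hand movement produces one confirmation.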

Picking confirmation

Guide operators to the correct bin or component location and automatically confirm when the item has been picked.
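Picking confirmation can be modelled as a small state machine over zone occupancy. The pick counts as done when the operator's hand enters the target bin's zone and then leaves it, and reaching into any other bin is flagged. The sketch assumes per-frame occupancy has already been computed for each bin zone, for example with a height-band check on the depth map. The class, bin IDs, and event names are hypothetical.

```python
from enum import Enum


class PickEvent(Enum):
    NONE = "none"
    WRONG_BIN = "wrong_bin"
    CONFIRMED = "confirmed"


class PickMonitor:
    """Hypothetical pick check driven by which bin zones contain a hand."""

    def __init__(self, target_bin: str) -> None:
        self.target_bin = target_bin
        self._hand_in_target = False

    def update(self, occupied_bins: set) -> PickEvent:
        """Process one frame given the set of bin IDs whose zone holds a hand."""
        if occupied_bins - {self.target_bin}:
            # A reach into any other bin is flagged and voids a pending pick.
            self._hand_in_target = False
            return PickEvent.WRONG_BIN
        if self.target_bin in occupied_bins:
            self._hand_in_target = True
            return PickEvent.NONE
        if self._hand_in_target:
            # The hand entered the target bin and has now left it: count as picked.
            self._hand_in_target = False
            return PickEvent.CONFIRMED
        return PickEvent.NONE


monitor = PickMonitor(target_bin="A3")
frames = [set(), {"B1"}, set(), {"A3"}, {"A3"}, set()]
events = [monitor.update(f) for f in frames]
```

Whether a wrong-bin reach should void a pending pick, as it does here, is a design choice that depends on the process.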

While 3D sensors confirm that a process step has been performed, machine vision systems go further, validating operator actions and inspecting products for shape, color, irregularities, or other quality indicators.

Advantages of 3D sensors

Hands-free operation – Operators can progress through steps without touching buttons. Perfect for tasks that require both hands to remain free.

Time savings – Eliminates the inefficiency of manually confirming each action, especially when confirmation buttons are not easily accessible.

Trade-offs of 3D sensors

No inspection capabilities – Sensors detect motion or distance but cannot visually inspect parts.

Limited accuracy – They provide confirmation, not detailed analysis.

Not self-learning – Sensor systems don’t adapt or improve based on outcomes.

No position control or automated adjustments – Sensors cannot correct or compensate for shifted parts or misalignment.

Advantages of machine vision

Enhanced precision and accuracy – Computer algorithms continuously monitor and verify manual actions, ensuring consistent execution.

Self-learning capability – Vision systems can be trained to distinguish between “OK” and “Not OK” through pixel-based recognition and advanced measurement algorithms.

Reveal the invisible – Detect what the human eye might miss: surface defects, irregularities, or subtle color deviations.

Position adjustment – Vision systems can automatically adjust for parts that are misaligned or slightly shifted, ensuring precise handling.

Trade-offs of machine vision

Overkill for simple applications – When only basic gesture or presence confirmation is needed, full vision systems may be unnecessary.

Requires higher expertise – Implementation and calibration demand more setup time and technical knowledge.

Step into the future of smart guided manufacturing!